Architecture and Design — Cache Strategies for Distributed Applications

Cache is an integral part of any application and plays a vital role in enhancing the performance, efficiency, and reliability of systems.

In this article, I would like to walk you through the different cache types and strategies that you can choose from based on your use case. Let us delve into the details.

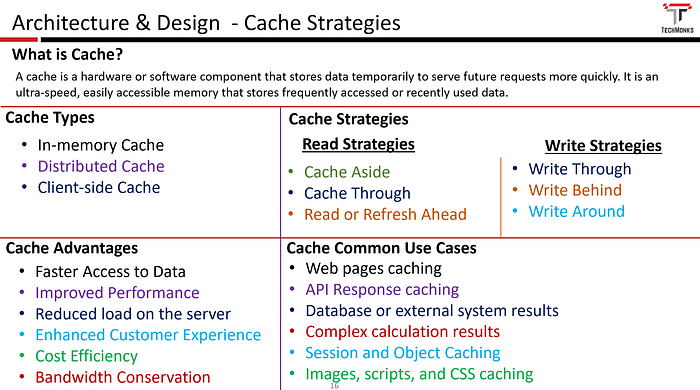

What is a cache, and why do you need it?

A cache is a hardware or software component that stores data temporarily to serve future requests more quickly. It is a high-speed, easily accessible storage layer that holds frequently accessed or recently used data. This enables your applications to serve user requests in milliseconds without having to fetch the data from the source every time it is requested.

Why do you need a cache?

Below are a couple of reasons to consider cache in your application.

- Faster Access to the Data: Loading data from the cache is typically much faster than loading it from a source such as a database or remote server.

- Improved Performance: By reducing the need to access slower storage or computing resources, caching significantly improves the overall performance of systems.

- Reduce Load on the Server: Caching offloads the server by serving repetitive requests from the cache, reducing the load on the servers or backend systems. This is particularly important in high-traffic scenarios.

- Enhanced Customer Experience: Improved performance enhances the customer experience significantly. Applications can load pages or respond much faster by leveraging the cache.

- Cost Efficiency: Caching reduces the workload on backend servers and databases, leading to cost savings in infrastructure and operational expenses.

- Bandwidth Conservation: Caching conserves bandwidth by serving content locally rather than fetching it from a remote server. This is especially important in scenarios where network bandwidth is limited or expensive.

Common Use cases

Below are a few common use cases where a cache can significantly improve performance.

- Images, scripts, and CSS caching using CDNs

- Database Query Results

- Web Page Caching (static content or frequently accessed pages)

- API Response Caching

- Session and Object Caching

- Complex Computational Results Caching

Before delving into the cache strategies, it is worthwhile to understand the various types of cache mechanisms available.

Cache Types

- In-memory Cache: An in-memory cache is a caching mechanism where data is stored in the system’s random access memory (RAM) for faster access and retrieval. The key characteristic of in-memory caching is that the data is kept in volatile memory, as opposed to being stored on disk or other persistent storage.

- Distributed Cache: A distributed cache is a caching mechanism where data is stored across multiple nodes in a network. Distributed caching allows multiple servers to share the workload of storing and retrieving data, which can improve the performance of the application and reduce the risk of data loss in the event of node failures.

- Client-side Cache: A client-side cache is a caching mechanism where data is stored in the browser or on the client device. This type of caching is useful for web applications that require frequent access to static resources, such as images and JavaScript files. Client-side caching can significantly improve the performance of a web application by reducing the number of requests made to the server. This can be done by adding the Cache-Control header to the server responses.
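To make the Cache-Control point concrete, here is a minimal sketch of a server attaching that header to its responses. It is written as a plain WSGI application using only the Python standard library. The function names, the suffix list, and the one-day `max-age` value are illustrative assumptions, not recommendations.

```python
# Hypothetical policy: the suffix list and the max-age value are assumptions.
STATIC_SUFFIXES = (".png", ".jpg", ".css", ".js")


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control value for a request path."""
    if path.endswith(STATIC_SUFFIXES):
        # Static assets: any cache may keep them for one day (86400 seconds).
        return "public, max-age=86400"
    # Everything else: ask caches not to store the response at all.
    return "no-store"


def app(environ, start_response):
    """Minimal WSGI application that attaches the header to every response."""
    path = environ.get("PATH_INFO", "/")
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Cache-Control", cache_control_for(path)),
    ]
    start_response("200 OK", headers)
    return [b"ok"]
```

With a policy like this, a browser could reuse a cached image for a day without contacting the server, while dynamic responses would be fetched fresh each time.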

Cache Strategies

Below are the cache strategies that we are covering here.

Read Cache Strategies

As the name suggests, read strategies define how the application reads data through the cache.

- Cache Aside (Lazy Loading)

- Cache-through (Proxy between client and server)

- Read Ahead (Prefetching)

Write Cache Strategies

As the name suggests, write cache strategies define how written data reaches the cache and the data store.

- Write-Through Cache

- Write-Behind Cache

- Write-Around

Let us go over each strategy in detail.

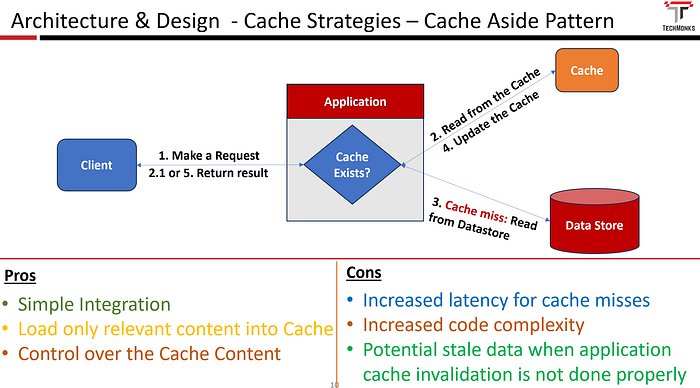

Cache Aside (A.K.A Lazy Loading)

A cache-aside pattern is a caching strategy where the application is responsible for reading the cache and loading the entries into the cache-store.

Implementation Approach

The below diagram depicts the control flow in the cache-aside pattern

- When the request comes, the application code verifies whether the associated response data is available in the cache or not.

- If the data exists in the cache, the application returns it from the cache as the response.

- If the data doesn’t exist in the cache, the application code reads it from the data store, loads it into the cache, and sends the response to the client.
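The steps above can be sketched as follows. This is a minimal illustration, not a production implementation: the two dictionaries stand in for a real cache and a real database, and every name here (`get_product`, `update_product`, `stats`) is a hypothetical one chosen for the example.

```python
# Stand-ins for a real cache server and a real database (both are assumptions).
cache = {}
database = {"product:1": {"name": "Keyboard", "price": 49.0}}
stats = {"db_reads": 0}  # counts trips to the data store, for illustration only


def read_from_database(key):
    """Simulate a slow read from the source of truth."""
    stats["db_reads"] += 1
    return database.get(key)


def get_product(key):
    """Cache-aside read: the application checks the cache, then the data store."""
    if key in cache:
        # Cache hit: return the cached value without touching the data store.
        return cache[key]
    # Cache miss: read from the data store.
    value = read_from_database(key)
    if value is not None:
        # Populate the cache so later requests are served from it.
        cache[key] = value
    return value


def update_product(key, value):
    """Write to the data store, then invalidate the cached copy."""
    database[key] = value
    cache.pop(key, None)
```

Calling `get_product("product:1")` twice reads the database only once. The `update_product` helper shows one common way to keep the cache from serving stale data: drop the entry on write so the next read reloads it.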

Use cases

Below are a few use cases where the cache-aside pattern shines.

- Read-heavy workloads such as product listings in e-commerce applications, application configuration or reference data, user bookmarks, etc.

- User profiles and preferences.

- Content Delivery Systems and CDNs

- Aggregated Data

- Search Results for popular search phrases

- User Session Data

Advantages

- Simple Integration

- Load only relevant content into the Cache

- Control over Cache Content

Disadvantages

- Potential stale data when cache invalidation is not implemented properly in the application code

- Increased latency for cache misses

- Increased code complexity as the application needs to manage the entire logic

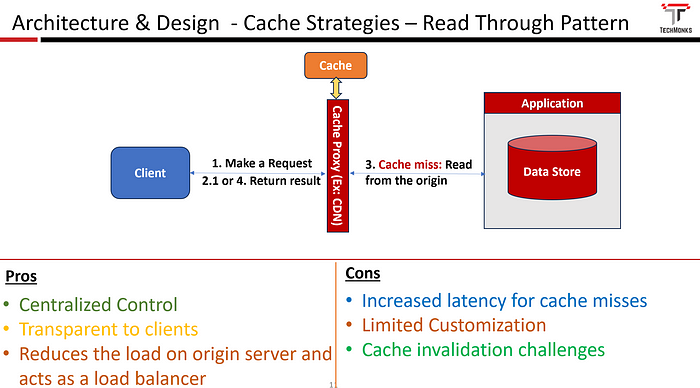

Cache-Through or Read-Through Pattern (A.K.A Proxy)

The Cache-Through pattern, also known as Proxy Cache, involves the use of a proxy or middleware component that sits between the clients (e.g., users or applications) and the data store. The proxy cache intercepts read and write requests, checking if the requested data is present in the cache. If the data is in the cache, it’s returned to the client; otherwise, the proxy fetches the data from the underlying data store, updates the cache, and then returns the data to the client.

The Read-Through pattern is very similar to the Cache Aside pattern. The only difference is that the cache component is in charge of fetching the data from the database instead of the application.

Implementation Approach

- The cache component checks whether the requested data exists in the cache.

- If the data exists in the cache, it is sent to the client as the response.

- If the data doesn’t exist in the cache, the cache server requests it from the origin and loads it into the cache.

- The response is then sent to the client.
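A minimal sketch of these steps is shown below. The key difference from cache-aside is that the caller only talks to the cache object, and the cache itself calls the origin on a miss. The class name `ReadThroughCache` and the `load_from_origin` function are hypothetical, and the origin is simulated with a dictionary.

```python
class ReadThroughCache:
    """A cache that fetches missing entries from the origin on its own."""

    def __init__(self, loader):
        self._loader = loader   # function the cache calls on a miss
        self._entries = {}

    def get(self, key):
        if key not in self._entries:
            # Miss: the cache (not the caller) loads the data from the origin.
            self._entries[key] = self._loader(key)
        # Hit, or freshly loaded: respond from the cache.
        return self._entries[key]


# Simulated origin; in practice this would be a database or upstream service.
origin = {"/home": "<h1>Home</h1>", "/about": "<h1>About</h1>"}
origin_calls = []


def load_from_origin(key):
    origin_calls.append(key)
    return origin[key]


page_cache = ReadThroughCache(load_from_origin)
```

Here `page_cache.get("/home")` hits the origin the first time and is served from memory afterwards. The caller never needs to know which of the two happened.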

Business Use cases

The business use cases mentioned as part of the cache-aside pattern are applicable here as well. Which of the two patterns you choose depends on your needs and preferences.

Advantages

- Transparent to clients as they are unaware of the caching happening at the middleware layer, providing a transparent caching solution without requiring changes to client applications.

- Centralized Control: This provides centralized control over the cache policies and allows for uniform and consistent caching strategies, making it easier to manage and enforce caching rules.

- Load Balancing: This enables your application to distribute the load more efficiently across backend servers, preventing individual servers from being overwhelmed with requests.

Disadvantages

- Limited Customization: The proxy cache might provide less flexibility in terms of customizing caching strategies compared to cache-aside patterns, where the application code manages the cache.

- Cache Invalidation Challenges: Implementing effective cache invalidation mechanisms can be complex, particularly in scenarios where the underlying data changes frequently.

- Potential for Stale Data: If cache invalidation is not handled appropriately, there is a risk of serving stale or outdated data to clients.

In practice, a combination of the Cache-Aside pattern and the Cache-Through pattern might be used in a single system to address different requirements. For example, Cache-Aside might be suitable for certain read-heavy components, while Cache-Through might be employed at an intermediate layer for transparent caching and load balancing. Note that in the case of the Cache-Through pattern, caching happens outside of the application, and the application isn’t aware of it. The best examples are your CDN, proxy server, and API Gateway cache mechanism.

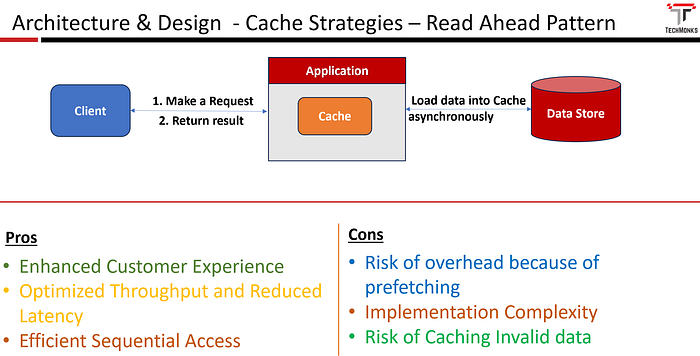

Read-Ahead or Refresh-Ahead Pattern (A.K.A Prefetching Strategy)

The read-ahead cache pattern, also known as prefetching, involves proactively loading data into the cache before it is explicitly requested. The idea is to anticipate future reads and load the relevant data into the cache to reduce latency when the data is later requested.

Implementation Approach

In this approach, data is ideally always served from the cache, because entries are loaded or refreshed in the cache before they are requested or before they expire.

- Define a mechanism to determine which data to preload into the cache based on anticipated future reads.

- Load the data into the cache asynchronously based on the configuration defined.

- The client makes a request, and data gets served from the cache.
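One way to sketch these steps is a prefetcher for sequentially numbered segments, as in the streaming use case. In this hypothetical example, the "mechanism" for predicting future reads is simply "the next few segments", loading happens on a background thread pool, and the class name `ReadAheadCache`, the `lookahead` parameter, and the `load_segment` function are all assumptions. Eviction and expiration are left out to keep the sketch short.

```python
from concurrent.futures import ThreadPoolExecutor


class ReadAheadCache:
    """Serves segment N and asynchronously preloads the segments after it."""

    def __init__(self, loader, lookahead=2, max_workers=2):
        self._loader = loader
        self._lookahead = lookahead
        self._executor = ThreadPoolExecutor(max_workers=max_workers)
        self._futures = {}  # segment index -> Future holding the loaded data

    def _schedule(self, index):
        # Start loading a segment in the background unless already started.
        if index not in self._futures:
            self._futures[index] = self._executor.submit(self._loader, index)

    def get(self, index):
        self._schedule(index)
        # Anticipate future reads: prefetch the next `lookahead` segments.
        for ahead in range(index + 1, index + 1 + self._lookahead):
            self._schedule(ahead)
        # Blocks only if the requested segment has not finished loading yet.
        return self._futures[index].result()

    def close(self):
        # Wait for in-flight loads and release the worker threads.
        self._executor.shutdown(wait=True)


loaded = []  # records which segments were fetched from the source


def load_segment(index):
    loaded.append(index)
    return f"segment-{index}"
```

After `ReadAheadCache(load_segment).get(0)`, segments 1 and 2 are already loading in the background, so a later `get(1)` usually returns without waiting on the source. A wrong prediction costs extra loads, which is the overhead risk discussed under the disadvantages of this pattern.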

Business Use cases

- Video and Audio Streaming Services: Video or audio streaming services can use Read-Ahead Caching to prefetch content segments that will likely be requested next, providing a smoother and uninterrupted streaming experience.

- Database Queries with Predictable Patterns: In databases, when there are known access patterns (e.g., certain reports or queries run at specific times), preloading relevant data into the cache can optimize query response times.

- Client-side or browser applications: prefetching web pages or resources that are likely to be accessed based on a user’s browsing behavior, improving the perceived performance of the application.

Advantages

- Enhanced Customer Experience: As this strategy prefetches required resources, applications could serve the requests very quickly and provide a smoother and more responsive user experience.

- Optimized Throughput and Reduced Latency: Read-ahead caching can enhance system throughput by ensuring that data is readily available when needed, reducing the time spent waiting for data retrieval, and reducing the latency significantly.

- Efficient Sequential Access: Particularly effective in scenarios where data is accessed sequentially, such as streaming or reading through large datasets.

Disadvantages

- Risk of Overhead: Prefetching data consumes system resources, and if not managed carefully, it can lead to increased resource usage and potential contention with other processes.

- Implementation Complexity: Implementing an effective read-ahead cache requires careful analysis of access patterns and the development of sophisticated algorithms to predict and prefetch data efficiently.

- Risk of Caching Irrelevant Data: If your prediction algorithm is not accurate, you end up caching data that is never requested, which wastes resources.

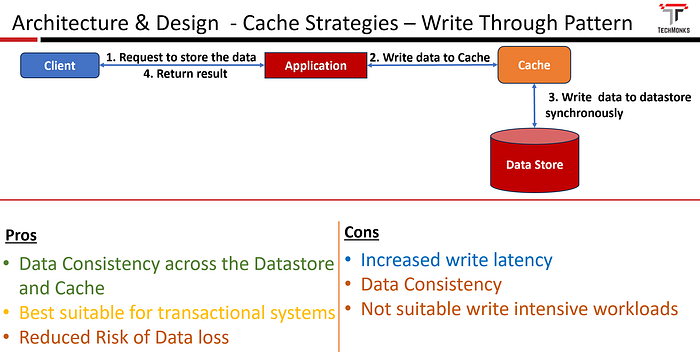

Write-Through Cache

The Write-Through Cache pattern is a caching strategy where write operations update the cache and immediately persist the changes to the underlying data store (e.g., database). In this pattern, the cache and the data store are kept in sync, ensuring that the data in the cache is always consistent with the data in the persistent storage.

Implementation Approach

The implementation approach involves the following steps:

- The client makes a request

- When a write operation is initiated, the data is first updated in the cache.

- As part of the same operation, the data is synchronously written to the underlying data store.

- The result is returned to the client.

The write operation is considered complete only when both the cache and the data store have been successfully updated.
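The flow above can be sketched as follows. This is a minimal illustration in which a dictionary stands in for the data store. The class name `WriteThroughCache` is hypothetical, and evicting the cache entry when the store write fails is one possible way to keep the two in sync, not the only one.

```python
class WriteThroughCache:
    """Every write updates the cache and the data store before returning."""

    def __init__(self, store):
        self._store = store   # stand-in for a database (assumption)
        self._cache = {}

    def put(self, key, value):
        # 1. Update the cache.
        self._cache[key] = value
        try:
            # 2. Synchronously persist the same value to the data store.
            self._store[key] = value
        except Exception:
            # Keep cache and store consistent: drop the unpersisted entry.
            self._cache.pop(key, None)
            raise
        # 3. Only now is the write considered complete.

    def get(self, key):
        if key in self._cache:
            return self._cache[key]
        value = self._store.get(key)
        if value is not None:
            self._cache[key] = value
        return value


account_store = {}
accounts = WriteThroughCache(account_store)
accounts.put("acct:42", {"balance": 100})
```

After the `put` call returns, both the cache and `account_store` hold the new balance. That is why write latency is higher here: the caller waits for both writes.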

Business Use cases

- Real-Time Data Processing Applications: Applications that require real-time processing of data, such as financial trading platforms or real-time analytics, can benefit from Write-Through caching to ensure that the latest data is always available.

- Transactional Systems and Data Consistency Requirements: In transactional systems where financial or critical data is involved, maintaining data integrity is paramount. The write-through cache pattern helps ensure that data is consistently updated across both the cache and the data store.

Advantages

- Data Consistency across the Datastore and Cache

- Reduced Risk of Data Loss: Since data is immediately written to the data store, there is a lower risk of data loss in case of a system failure or crash.

- Suitable for Transactional Systems

Disadvantages

- Increased write latency

- Not suitable for write-intensive workloads

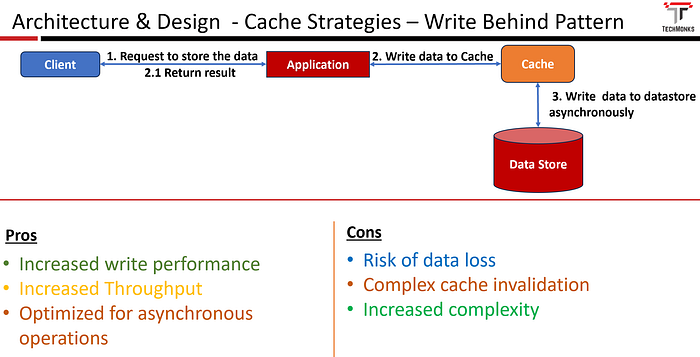

Write-Behind Cache

The Write-Behind Cache pattern is a caching strategy where write operations are initially performed on the cache and the corresponding updates to the underlying data store are deferred or postponed. Instead of immediately updating the data store, the updates are batched and written in a delayed fashion, often in the background or during periods of lower system activity.

Implementation Approach

Steps involved in implementing the Write-Behind Cache

- When a write operation is initiated, the data is first updated in the cache.

- Instead of immediately persisting the changes to the data store, the updates are recorded in a write-behind queue or log.

- Periodically or asynchronously, the updates in the queue are processed, and the changes are written to the underlying data store.

The write-behind strategy is very similar to the write-through approach. The only difference is that write-behind updates the database asynchronously.
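A minimal sketch of the queue-based flow is shown below. The class name `WriteBehindCache`, the `batch_size` parameter, and the explicit `flush()` method are assumptions made for the example. In a real system, `flush()` would typically be triggered by a timer or a background worker rather than called by hand.

```python
from collections import deque


class WriteBehindCache:
    """Writes go to the cache at once and reach the data store later."""

    def __init__(self, store, batch_size=100):
        self._store = store          # stand-in for a database (assumption)
        self._cache = {}
        self._pending = deque()      # the write-behind queue
        self._batch_size = batch_size

    def put(self, key, value):
        # 1. Update the cache immediately.
        self._cache[key] = value
        # 2. Record the update instead of persisting it right away.
        self._pending.append((key, value))

    def get(self, key):
        if key in self._cache:
            return self._cache[key]
        return self._store.get(key)

    def flush(self):
        """Persist up to batch_size queued updates; return how many were written."""
        written = 0
        while self._pending and written < self._batch_size:
            key, value = self._pending.popleft()
            self._store[key] = value
            written += 1
        return written


event_store = {}
events = WriteBehindCache(event_store, batch_size=2)
```

Until `flush()` runs, queued updates exist only in memory, so a crash at that point loses them. This is the data-loss risk listed under the disadvantages.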

Business Use cases

- Asynchronous Data Replication: Write-behind caching can be employed in systems with distributed or replicated data stores. Updates can be asynchronously propagated to replicas or other nodes in the system.

- Write Performance Optimization: Write-behind caching is beneficial in scenarios where optimizing write performance is a priority. By batching and deferring write operations, the system can achieve better overall throughput.

Advantages

- Improved write performance: Write-behind caching improves overall write performance by allowing the system to batch and defer write operations, reducing the impact of immediate writes on system responsiveness.

- Increased Throughput: By batching write operations, the system can achieve higher throughput, especially in scenarios with bursty write workloads.

- Optimized for Asynchronous Operations: Write-behind caching is well-suited for scenarios where asynchronous processing of write updates is acceptable, such as in systems that can tolerate eventual consistency.

Disadvantages

- Risk of Data Loss

- Complex Cache Invalidation

- Increased complexity

Write-behind caching is a trade-off between immediate data consistency and improved write performance.

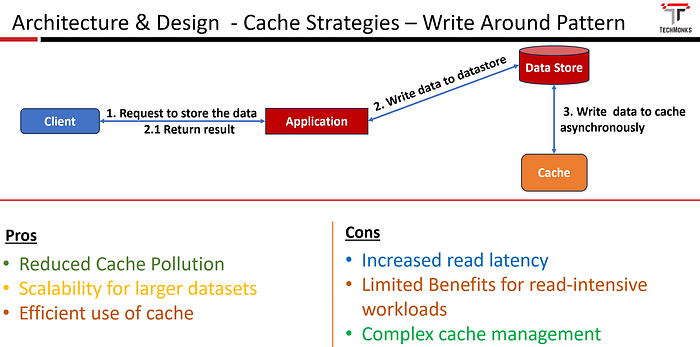

Write Around Cache

The write-around cache pattern is a caching strategy where write operations are written directly to the underlying data store. In this pattern, data is only brought into the cache on read requests, and write operations do not update the cache.

You need to ensure that you remove (invalidate) the corresponding cache entries after updating the data in the data store, or refresh the data in the cache asynchronously.

Implementation Approach

Steps involved in implementing the Write-Around Cache.

- When a write operation is initiated, the data is directly written to the underlying data store, bypassing the cache.

- Subsequent read requests may bring the data into the cache, but it is not proactively cached during write operations.
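These two steps can be sketched as follows. The class name `WriteAroundCache` and its method names are hypothetical, and a dictionary stands in for the data store. The sketch invalidates any cached copy on write, which is one way to avoid serving stale data.

```python
class WriteAroundCache:
    """Writes bypass the cache; only reads bring data into it."""

    def __init__(self, store):
        self._store = store   # stand-in for a database (assumption)
        self._cache = {}

    def write(self, key, value):
        # Write directly to the data store, bypassing the cache.
        self._store[key] = value
        # Drop any older cached copy so reads do not return stale data.
        self._cache.pop(key, None)

    def read(self, key):
        if key in self._cache:
            return self._cache[key]
        # Miss: load from the data store and cache it for later reads.
        value = self._store.get(key)
        if value is not None:
            self._cache[key] = value
        return value

    def is_cached(self, key):
        return key in self._cache


log_store = {}
audit_log = WriteAroundCache(log_store)
audit_log.write("entry:1", "user 7 logged in")
```

Right after the write, `audit_log.is_cached("entry:1")` is false. The entry enters the cache only if someone actually reads it, which is what keeps rarely read data from polluting the cache.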

Business Use cases

- Dealing with large datasets: For large data sets that may not fit well in the cache or where caching the entire set is impractical, write-around caching allows selective caching based on actual read access.

- Archival data: In systems where data is archived or stored for compliance reasons but is rarely accessed, write-around caching helps avoid caching data that may not be needed again.

- Logging and audit trails: Write-around caching can be effective for scenarios where logging or audit trails generate large amounts of data that don’t need to be immediately cached but may be accessed for auditing purposes later.

Advantages

- Reduced Cache Pollution

- Scalability for large data sets

- Efficient use of Cache

Disadvantages

- Increased Read Latency

- Limited Benefits for read-intensive workloads

- Complex Cache Management

Write-around caching is a strategy that is particularly beneficial when dealing with large or infrequently accessed data sets. It provides a way to avoid unnecessary cache pollution with data that may not be immediately needed for read operations.

That’s all for today!
